Understanding the Transformer Paper Through Implementation

A detailed implementation of binary classification using a transformer encoder

Introduction

In recent years, transformer

Self Attention

Attention is a neural network layer that maps a set to a set or, once positional information is added, a sequence to a sequence. There are two kinds of attention layer, self attention and cross attention, and each can be either hard attention or soft attention. Suppose that $\{x_i\}_{i=0}^t$ is a set of row vectors where $x_i^{\mathsf{T}}\in \mathbb{R}^d$. If $\{x_i\}_{i=0}^t$ is the input of a self attention layer, then each output is a linear combination of the inputs

$h=\alpha_0x_0+\dots +\alpha_tx_t=aX$, where $X$ is the matrix whose rows are $x_0,\dots,x_t$ and $a=\begin{bmatrix}\alpha_0 & \alpha_1 & \dots & \alpha_t\end{bmatrix}$

is a row vector called the attention vector. When $a$ is a one-hot encoding the layer is called hard attention; otherwise it is called soft attention, and the elements of $a$ are non-negative and sum to $1$. In practice we collect the attention vectors into a matrix $A$, in which each row is one attention vector. To match the mathematical convention of the original transformer paper, this article represents a sequence of vectors as a matrix whose vertical axis is the sequence length and whose horizontal axis is the vector dimension, as depicted in the following diagram.

The sequential order of the attention layer's input can be lost during the computation. For instance, let $V \in \mathbb{R}^{3\times3}$ be a matrix representing a sequence of three 3-dimensional (row) vectors. The attention layer multiplies $V$ by an attention matrix $A$; if $A$ happens to be a permutation matrix, the order information of $V$ is lost. To see this, take a look at the following illustration:

$$H = AV = \begin{bmatrix} 0 & 1 & 0\\ 0 & 0 & 1\\ 1 & 0 & 0\\ \end{bmatrix} \begin{bmatrix} {\color{teal}v_0}\\ {\color{orange}v_1}\\ {\color{red}v_2}\\ \end{bmatrix} =\begin{bmatrix} {\color{orange}v_1}\\ {\color{red}v_2}\\ {\color{teal}v_0}\\ \end{bmatrix}$$

As you can see, $A$ permutes the rows of $V$, which means it permutes the sequence order. In other words, attention ignores positional information, so attention maps a set to a set. The transformer paper, however, proposes a way to make attention map a sequence to a sequence: encode the positional information and inject it into the input matrix, as explained in a later section of this article.
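To make the permutation concrete, here is a minimal sketch in plain Python. The numbers in `V` are arbitrary stand-ins for $v_0, v_1, v_2$, and `matmul` is a small helper written only for this illustration.

```python
# Rows of V stand in for the vectors v_0, v_1, v_2 (arbitrary values).
V = [[1.0, 0.0, 0.0],
     [0.0, 2.0, 0.0],
     [0.0, 0.0, 3.0]]

# The permutation matrix A from the illustration.
A = [[0, 1, 0],
     [0, 0, 1],
     [1, 0, 0]]

def matmul(A, B):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)]
            for row in A]

H = matmul(A, V)
print(H)  # the rows come out in the order v_1, v_2, v_0
```

The rows of `H` are the same vectors as the rows of `V`, only in a different order, which is why the output is better thought of as a set than as a sequence.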

Queries, Keys and Values

The transformer's attention layer was inspired by the key-value store mechanism that we find in programming-language data structures such as Python's built-in dictionary. A Python dictionary holds key-value pairs, and we fetch a value by feeding it a query: conceptually, the dictionary compares the query to each key and, if the query matches a key, returns the value corresponding to that key. To mimic this behaviour, the transformer's attention layer transforms the input matrix $X$ into three entities, query, key, and value, analogous to a Python dictionary.

These entities are generated by applying a linear transformation to each row vector of the input matrix. For instance, to get a value vector $v$, multiply a row vector $x$ of $X$ by a matrix $W_{value} \in \mathbb{R}^{\text{input dimension}\times d_v}$; the same rule applies to the key and query vectors:

$$v=xW_{value}$$ $$k = xW_{key}$$ $$q=xW_{query}$$

In matrix form this becomes

$$V=XW_{value}$$ $$K=XW_{key}$$ $$Q=XW_{query}$$

Let's get some intuition about this concept. Suppose that $q$ is a query vector (a row of the matrix $Q$). The $i^{th}$ element of its attention vector $a$ is a similarity value between $q$ and the $i^{th}$ row of the key matrix, denoted by $k_i$. There are many ways to measure the similarity between two vectors; one of the simplest is the dot product between $q$ and $k_i$. To compute the dot product with every key vector at once we compute $qK^{\mathsf{T}}$, which compares the query with every row of the key matrix. To compute the similarity between all query vectors and all key vectors, simply calculate $QK^{\mathsf{T}}$.
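The projections and the similarity matrix can be sketched in plain Python as follows. The sizes (`t`, `d_in`, `d_k`, `d_v`) and the random weights are assumptions made only for this example; in a real model the weight matrices are learned.

```python
import random

random.seed(0)
t, d_in, d_k, d_v = 4, 6, 3, 5  # hypothetical sizes

def matmul(A, B):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)]
            for row in A]

def transpose(M):
    return [list(col) for col in zip(*M)]

def random_matrix(rows, cols):
    return [[random.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]

X = random_matrix(t, d_in)            # one input vector per row
W_query = random_matrix(d_in, d_k)
W_key = random_matrix(d_in, d_k)
W_value = random_matrix(d_in, d_v)

Q = matmul(X, W_query)                # t x d_k
K = matmul(X, W_key)                  # t x d_k
V = matmul(X, W_value)                # t x d_v

# scores[i][j] is the dot product between query i and key j.
scores = matmul(Q, transpose(K))      # t x t
```

Note that queries and keys must share the dimension `d_k` for the dot product to be defined, while the value dimension `d_v` is free to differ.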

As mentioned in the previous section, each attention vector (each row of the attention matrix $A$) should sum to 1, like a probability distribution. To achieve this we apply $\text{argsoftmax}(\cdot)$ (more commonly called softmax) to each row of the similarity matrix:

$A=\text{argsoftmax}(\frac{QK^{\mathsf{T}}}{\beta})$, where $\beta$ is a scaling factor that keeps the variance stable, as explained in the next section. To make $A$ a hard attention matrix, we can replace $\text{argsoftmax}(\cdot)$ with a one-hot $\text{argmax}(\cdot)$.
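As a small sketch of the difference between the two choices, the functions below (named `softmax` and `one_hot_argmax` just for this illustration) turn a row of similarity scores into a soft or a hard attention vector. The example scores are made up.

```python
import math

def softmax(row, beta=1.0):
    """Soft attention: divide by beta, exponentiate, normalize to sum to 1."""
    scaled = [s / beta for s in row]
    m = max(scaled)  # subtracting the max avoids overflow in exp
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def one_hot_argmax(row):
    """Hard attention: 1 at the position of the largest score, 0 elsewhere."""
    best = row.index(max(row))
    return [1.0 if i == best else 0.0 for i in range(len(row))]

scores = [[2.0, 1.0, 0.1],
          [0.5, 0.5, 3.0]]
A_soft = [softmax(r) for r in scores]
A_hard = [one_hot_argmax(r) for r in scores]
```

A larger `beta` flattens the soft attention vector toward a uniform distribution, while a smaller one pushes it toward the hard, one-hot case.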

The output of self attention is then $H=AV$, and a row of $H$ is $h=aV$. To build intuition, first imagine that $a$ is a one-hot vector: multiplying $a$ by the matrix $V$ then simply selects one row of $V$ and returns it as $h$. When $a$ is not one-hot, it "mixes" several rows of $V$ and returns the mixture as $h$. The hard attention case is almost identical to a Python dictionary lookup, while the soft attention case is like a more flexible version of a Python dictionary. To better understand this, let's look at an example. Given a Python dictionary:
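The following is a minimal sketch of the analogy, with made-up keys, values, and query chosen only for illustration. The first part is an ordinary dictionary lookup; the second part replaces the exact match with similarity scores.

```python
import math

# Hard lookup: exactly one key matches, exactly one value comes back.
store = {"a": [1.0, 0.0], "b": [0.0, 1.0]}
hard = store["a"]

# Soft lookup: keys are vectors, and every value contributes in
# proportion to how similar its key is to the query.
keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[1.0, 0.0], [0.0, 1.0]]
query = [2.0, 0.0]  # most similar to the first key

scores = [sum(q * k for q, k in zip(query, key)) for key in keys]
exps = [math.exp(s) for s in scores]
a = [e / sum(exps) for e in exps]  # attention vector, sums to 1

# h = aV: a weighted mix of the rows of `values`.
h = [sum(w * v[j] for w, v in zip(a, values)) for j in range(len(values[0]))]
```

Here `h` is dominated by the first value because the query is closest to the first key, but the second value still leaks in with a small weight.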

To summarize this section, the attention layer can be visualized with the following diagram

or, more compactly, with the following diagram

Scaled Argsoftmax

This section deals mostly with the mathematical derivation of the scale factor in the scaled argsoftmax, which the original paper does not explain in detail. If you are already familiar with probability theory, specifically with the mean and variance of a random variable, you can safely skip this section.

A large key dimension ($d_k$) causes high variance in the entries of $QK^{\mathsf{T}}$, which has a negative impact on training, as the paper mentions:

We suspect that for large values of $d_k$, the dot products grow large in magnitude, pushing the softmax function into regions where it has extremely small gradients. To counteract this effect, we scale the dot products by $\frac{1}{\sqrt{d_k}}$

However, it is not obvious why the scale factor should be $\frac{1}{\sqrt{d_k}}$. The original paper gives the following reason:

To illustrate why the dot products get large, assume that the components of $q$ and $k$ are independent random variables with mean 0 and variance 1. Then their dot product, $q \cdot k = \sum_{i=1}^{d_k} q_ik_i$, has mean 0 and variance $d_k$.

The first time I read the passage above, it was not obvious to me why the variance of $q\cdot k$ is $d_k$, or why the scale factor is $\frac{1}{\sqrt{d_k}}$. In this section we will prove it mathematically.

The original transformer paper assumes that readers have some familiarity with basic probability theory. If, like me, you are not super familiar with it, here is a refresher. Suppose that $X$ and $Y$ are independent random variables. Directly from the definition of variance it is easy to show that

$$\begin{aligned}E[X^2]&=\text{Var}[X]+E[X]^2\\ E[Y^2]&=\text{Var}[Y]+E[Y]^2\end{aligned}$$

Then, using independence to factor $E[X^2Y^2]=E[X^2]E[Y^2]$ and $E[XY]=E[X]E[Y]$,

$$\begin{aligned}{\text{Var}[XY]} &= E[X^2Y^2]-E[XY]^2 \\ &= {\color{orange}E[X^2]}{\color{teal}E[Y^2]}-E[X]^2E[Y]^2\\&=({\color{orange}\text{Var}[X]+E[X]^2})({\color{teal}\text{Var}[Y]+E[Y]^2})-E[X]^2E[Y]^2 \\&=\text{Var}[X]\text{Var}[Y]+\text{Var}[X]E[Y]^2+\text{Var}[Y]E[X]^2+{\color{purple}E[X]^2E[Y]^2-E[X]^2E[Y]^2}\\&=\text{Var}[X]\text{Var}[Y]+\text{Var}[X]E[Y]^2+\text{Var}[Y]E[X]^2\end{aligned}$$

Now assume that the components of every row vector of $K$ and $Q$ are independent random variables with zero mean and unit variance. Let $k$ and $q$ be row vectors of $K$ and $Q$ respectively, so that $k\cdot q$ is an element of $QK^{\mathsf{T}}$. Because the terms $k_iq_i$ are independent, the variance of their sum is the sum of their variances:

$$\begin{aligned}\text{Var}[k\cdot q]&= \text{Var}\left[\sum _{i=1}^{d_k}k_iq_i\right]\\&=\sum _{i=1}^{d_k}\text{Var}[k_iq_i]\\&=\sum _{i=1}^{d_k}\left(\text{Var}[k_i]\text{Var}[q_i]+{\color{teal}\text{Var}[k_i]E[q_i]^2}+{\color{orange}\text{Var}[q_i]E[k_i]^2}\right)\\&=\sum _{i=1}^{d_k}\left(1+{\color{teal}0}+{\color{orange}0}\right)\\&=d_k\end{aligned}$$

So the elements of $QK^{\mathsf{T}}$ have zero mean and variance $d_k$. The variance depends on the dimension of the key and query vectors, which is unwanted behaviour, since changing the dimension also changes the variance: too high a variance pushes the argsoftmax output toward hard (one-hot) attention, while too low a variance makes it nearly uniform. We want to keep zero mean and unit variance. Since $\text{Var}[\alpha X]=\alpha^2\text{Var}[X]$, we should scale $QK^{\mathsf{T}}$ by $\frac{1}{\sqrt{d_k}}$, so that

$$\begin{aligned}\text{Var}\left[\frac{k\cdot q}{\sqrt{d_k}}\right]&=\left(\frac{1}{\sqrt{d_k}}\right)^2\text{Var}[k\cdot q]\\&=\frac{d_k}{d_k}\\ &= 1\end{aligned}$$

Hence the attention matrix becomes $A=\text{argsoftmax}\left(\frac{QK^{\mathsf{T}}}{\sqrt{d_k}}\right)$
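If you prefer to check the result numerically, the following sketch draws random query and key vectors with zero-mean, unit-variance components and estimates the variance of their dot product before and after scaling. The dimension `d_k = 64` and the number of samples are arbitrary choices for this experiment.

```python
import random
import statistics

random.seed(0)
d_k = 64          # key/query dimension (arbitrary choice)
n_samples = 5000  # number of random (q, k) pairs

def dot(q, k):
    return sum(qi * ki for qi, ki in zip(q, k))

dots = []
for _ in range(n_samples):
    q = [random.gauss(0.0, 1.0) for _ in range(d_k)]
    k = [random.gauss(0.0, 1.0) for _ in range(d_k)]
    dots.append(dot(q, k))

var_raw = statistics.pvariance(dots)
var_scaled = statistics.pvariance([x / d_k ** 0.5 for x in dots])

print(f"variance of q.k            : {var_raw:.2f} (theory: {d_k})")
print(f"variance of q.k / sqrt(d_k): {var_scaled:.2f} (theory: 1)")
```

The estimates fluctuate from run to run, but they should land close to $d_k$ and $1$ respectively.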

Positional Encoding

Recall from the previous section that the input of the attention layer might lose its sequential order information. Before diving into the method proposed by the authors to make the attention layer retain the positional information of its input, let's think about some alternatives.

- The naive solution is to concatenate the index to the input, for instance $\{[0,v_0], [1,v_1], \dots ,[n,v_n]\}$. This could work, but it has a serious drawback: once we normalize the index, its value depends on the sequence length.

- Use a binary number as the index instead of a decimal one. This approach seems promising but is still flawed, since the Euclidean distance between two adjacent indices is not consistent.

The authors of the paper "Attention Is All You Need" propose a method based on the following functions:

$$\text{PE} (pos,2i) = \sin\left({\frac{pos}{10000^{\frac{2i}{d_{\text{model}}}}}}\right)$$

$$\text{PE} (pos,2i+1) = \cos\left({\frac{pos}{10000^{\frac{2i}{d_{\text{model}}}}}}\right)$$

These functions were chosen because they have a desirable mathematical property: for any fixed offset $k$, $\text{PE}(pos+k)$ is a linear function of $\text{PE}(pos)$, so the relationship between two positions a given distance apart is consistent. The encoding is also not concatenated with the input; instead it is combined with it by element-wise addition. The argument is that empirically there is not much difference between concatenating the positional encoding with the input and adding it element-wise, while element-wise addition has a smaller memory footprint. More recent work offers other ways to inject positional information into the transformer input, but they are out of the scope of this article.
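The sinusoidal encoding can be sketched in a few lines of plain Python. This version uses the base 10000 from the original paper and assumes an even `d_model`; the function name, the sizes, and the constant stand-in embeddings are choices made only for this example.

```python
import math

def positional_encoding(seq_len, d_model):
    """Return a seq_len x d_model table of sinusoidal encodings (d_model even)."""
    pe = [[0.0] * d_model for _ in range(seq_len)]
    for pos in range(seq_len):
        # `i` runs over the even column indices, i.e. it plays the role
        # of 2i in the formula.
        for i in range(0, d_model, 2):
            angle = pos / (10000 ** (i / d_model))
            pe[pos][i] = math.sin(angle)
            pe[pos][i + 1] = math.cos(angle)
    return pe

pe = positional_encoding(seq_len=50, d_model=8)

# Inject the position by element-wise addition, not concatenation.
x = [[0.5] * 8 for _ in range(50)]  # stand-in word embeddings
x_with_pos = [[xi + pi for xi, pi in zip(x_row, pe_row)]
              for x_row, pe_row in zip(x, pe)]
```

Because the encoding is added rather than concatenated, `x_with_pos` keeps the same shape as `x`.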

Multi Head Attention

A multi-head attention layer is simply multiple copies of the attention layer, where the copies do not share their weight parameters. On top of them we add a concatenation and a fully connected layer to bring the result back to the shape of a single-head output.

Build a Classifier Based on Transformer Architecture

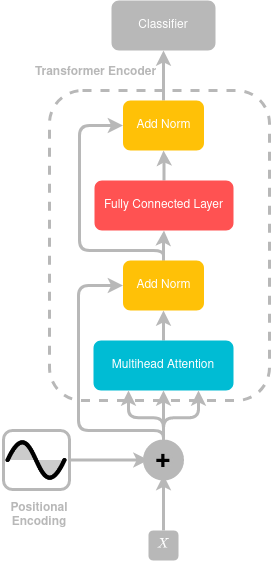

Now we have the nuts and bolts needed to build our transformer architecture; it's time to put them together. In the original paper the transformer consists of two parts, an encoder and a decoder. In this article we will not implement the decoder; let's leave that for the next article. Instead, we will build only the encoder part of the transformer and add a classification head on top of it.

As you can see from the diagram, we have a skip connection (residual connection) like the one in ResNet. It connects the output of the point-wise addition of the positional encoding to the add-norm layer. The add-norm layer is simply a matrix addition followed by layer normalization.
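As a rough sketch of the add-norm step, the code below adds a sublayer's output to its input and normalizes each row. It is a simplification: the layer normalization here has no learned scale and shift parameters, and the input values are made up.

```python
import math

def layer_norm(row, eps=1e-5):
    """Normalize one vector to zero mean and (nearly) unit variance.
    Simplified: no learned scale/shift parameters."""
    mean = sum(row) / len(row)
    var = sum((v - mean) ** 2 for v in row) / len(row)
    return [(v - mean) / math.sqrt(var + eps) for v in row]

def add_norm(x, sublayer_out):
    """Residual (skip) connection followed by layer normalization, row by row."""
    return [layer_norm([a + b for a, b in zip(x_row, s_row)])
            for x_row, s_row in zip(x, sublayer_out)]

x = [[1.0, 2.0, 3.0, 4.0],
     [0.0, 0.0, 1.0, 1.0]]
sublayer_out = [[0.5, -0.5, 0.5, -0.5],   # e.g. the output of attention
                [1.0, 2.0, 3.0, 4.0]]
out = add_norm(x, sublayer_out)
```

Each row of `out` has the same length as the corresponding row of `x`, so add-norm layers can be stacked freely.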

Detailed Implementation

In this article we will not implement the sequence-to-sequence transformer demonstrated in the paper. Instead we will implement a simpler one: a classification transformer that decides whether a sentence from the IMDB dataset has positive or negative sentiment. Implementing the sequence-to-sequence transformer of the original paper takes more effort, since it also requires implementing beam search; I think I will try it in the next article.

Generate Key, Query, Value Matrices

The first thing we should do is generate the key, query, and value matrices. This can easily be achieved by using

Split Head

Remember that our query from the previous operation has the shape

Why should we split the heads? Because we compute the argsoftmax along the horizontal axis so that each row sums to 1. If we don't split, we end up applying the argsoftmax across all heads, whereas what we want is for the attention vector of every single head to sum to one. This operation can be done by using
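To illustrate the idea without any tensor library, here is a plain-Python sketch that splits one matrix into heads along the feature axis and merges them back. It ignores the batch axis, and the helper names `split_heads` and `merge_heads` are invented for this example.

```python
def split_heads(M, n_heads):
    """Split a (seq_len x d_model) matrix into n_heads matrices,
    each of shape (seq_len x d_model // n_heads)."""
    d_model = len(M[0])
    assert d_model % n_heads == 0, "d_model must be divisible by n_heads"
    d_head = d_model // n_heads
    return [[row[h * d_head:(h + 1) * d_head] for row in M]
            for h in range(n_heads)]

def merge_heads(heads):
    """Inverse of split_heads: concatenate the heads along the feature axis."""
    seq_len = len(heads[0])
    return [[v for head in heads for v in head[i]] for i in range(seq_len)]

Q = [[1, 2, 3, 4],
     [5, 6, 7, 8],
     [9, 10, 11, 12]]
heads = split_heads(Q, n_heads=2)
# heads[0] holds the first two columns of Q, heads[1] the last two.
```

Each head then gets its own attention matrix, so the normalization to sum 1 happens per head rather than across all heads.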

Scaled Dot Product Attention

Implementing the scaled dot product is pretty straightforward, but one thing to note: since the first and second axes of the key tensor are the batch size and the number of heads respectively, the transpose must swap the third and fourth axes, so it becomes
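For reference, here is a dependency-free sketch of scaled dot-product attention for a single head and a single example, so the transpose is an ordinary matrix transpose. With batch and head axes in front, the same transpose applies to the last two axes only. The helper functions and the toy inputs are written just for this illustration.

```python
import math

def matmul(A, B):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)]
            for row in A]

def transpose(M):
    return [list(col) for col in zip(*M)]

def softmax(row):
    m = max(row)  # subtracting the max avoids overflow in exp
    exps = [math.exp(s - m) for s in row]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(Q, K, V):
    """Return (H, A) with A = softmax(Q K^T / sqrt(d_k)) and H = A V."""
    d_k = len(K[0])
    scores = matmul(Q, transpose(K))
    A = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(A, V), A

Q = [[1.0, 0.0], [0.0, 1.0]]
K = [[1.0, 0.0], [0.0, 1.0]]
V = [[1.0, 2.0], [3.0, 4.0]]
H, A = scaled_dot_product_attention(Q, K, V)
```

In this toy input each query matches its own key best, so each row of `H` leans toward the corresponding row of `V` while still mixing in the other one.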

Now let's update our multi-head attention network and add the scaled dot product to it. Don't forget to concatenate the result from

Feed Forward Network

This is a simple two-layer multilayer perceptron with a ReLU activation in the middle, whose input and output have size
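A minimal plain-Python sketch of such a network computes $\max(0, XW_1+b_1)W_2+b_2$ for every row of $X$. The tiny hand-picked weights below (model size 2, hidden size 3) are assumptions for illustration only; real models use much larger, learned weights.

```python
def matmul(A, B):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)]
            for row in A]

def add_bias(M, b):
    return [[v + bj for v, bj in zip(row, b)] for row in M]

def relu(M):
    return [[max(0.0, v) for v in row] for row in M]

def feed_forward(X, W1, b1, W2, b2):
    """Two linear layers with a ReLU in between, applied to each row of X."""
    hidden = relu(add_bias(matmul(X, W1), b1))
    return add_bias(matmul(hidden, W2), b2)

X = [[1.0, -1.0],
     [0.5, 2.0]]
W1 = [[1.0, 0.0, -1.0],
      [0.0, 1.0, 1.0]]
b1 = [0.0, 0.0, 0.0]
W2 = [[1.0, 0.0],
      [0.0, 1.0],
      [1.0, 1.0]]
b2 = [0.1, -0.1]
Y = feed_forward(X, W1, b1, W2, b2)
```

The output `Y` has the same shape as the input `X`, which is what lets the block sit inside a residual connection.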

Positional Encoding

The size of the positional encoding will be

Encoder

Let's take a look at the encoder architecture in the original diagram from the authors. We need multi-head attention, positional encoding, layer normalization, and an MLP.

Word Embedding

Now let's implement the part that adds the positional encoding to the vector encoding, as a subclass of

Encoder

Transformer Classifier

We will add a simple linear layer on top of the transformer for classification. I will not put the entire code here; the remaining parts, the training loop and the dataset loading, were taken from Alfredo Canziani's great lecture. You can access my complete code

Conclusion

Implementing a transformer is not a trivial matter. Even though the authors say that the idea behind the transformer is simple, the technical details make it considerably more difficult.